sotonDH Small Grants: Investigation into Synthesizer Parameter Mapping and Interaction for Sound Design Purposes – Post 3

by Darrell Gibson

Research Group: Composition and Music Technology

Introduction

In the previous blog posts, three research questions and supporting literature were presented in relation to how synthesizers are used for sound design. With these in mind, the next stage has been to consider how the research questions can begin to be evaluated and answered. In line with these, it is proposed that the primary focus of this investigation will be the synthesizer programming requirements of sound designers. These professionals tend to have extensive experience in this area, so when undertaking a sound creation task they are likely to have concrete ideas about the sound elements they require or the direction they want to take. Although techniques have been proposed that allow interpolation to be performed between different parameter sets, the sets themselves must first be defined by the sound designer. This could be done using some form of resynthesis if the sound designer can supply “targets” for the texture that they require. Ideally this task would be “unsupervised”, so that sound designers would not have to spend time refining the synthesis generation and it would essentially be an automatic process.
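
As a rough illustration of what an unsupervised, automatic match to a target might involve, the Python sketch below searches a synthesizer's normalised parameter space for the setting whose analysed features are closest to those of a target sound. Everything here is an assumption for illustration: `toy_synth_features` is a hypothetical stand-in for rendering a patch and analysing its audio, the two features and their scaling are invented, and plain random search is used in place of whatever optimiser (for example a genetic algorithm) a real resynthesis system might use.

```python
import math
import random


def toy_synth_features(params):
    """Hypothetical stand-in for rendering a patch and analysing the audio.

    Maps two normalised parameters (0..1) to two invented features:
    a pretend brightness in Hz and a pretend loudness in 0..1.
    """
    cutoff, level = params
    brightness = 200.0 + cutoff * 7800.0  # 200 Hz .. 8 kHz
    loudness = level ** 2
    return (brightness, loudness)


def feature_distance(a, b):
    # Scale brightness so that neither feature dominates the distance.
    return math.hypot((a[0] - b[0]) / 8000.0, a[1] - b[1])


def match_target(target_features, n_params=2, iterations=2000, seed=0):
    """Random search for the parameter set whose features best match the target."""
    rng = random.Random(seed)
    best_params = [0.5] * n_params
    best_error = feature_distance(toy_synth_features(best_params), target_features)
    for _ in range(iterations):
        candidate = [rng.random() for _ in range(n_params)]
        error = feature_distance(toy_synth_features(candidate), target_features)
        if error < best_error:
            best_params, best_error = candidate, error
    return best_params, best_error
```

In practice the feature extractor would analyse rendered audio, and a stronger optimiser would be needed once the number of parameters grows beyond a handful.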

Having defined the sound textures that the designer wishes to use in a particular creation, the mapping of the parameters will then need to be considered. This is a vital area, as the mapping must be done in a way that permits the sound space to be explored in a logical and intuitive manner. This sound space may be either a representation of the parameter space or a timbre space if more perceptual mappings are used. The mapping process itself could be performed automatically or defined by the user. In addition, the interpolation systems developed so far offer only straight interpolation between the parameters of the target sounds, whereas sound designers often apply different forms of manipulation to the sound textures they are using, such as layering, flipping, reversing, time stretching and pitch shifting. As a result, there would be an obvious advantage to an interpolation system that allowed not only the exploration of the available sound space but also more flexible manipulation of the sound textures. Ideally this programming interface will be independent of the actual synthesis engine, so that it can be used with any form of synthesis and will mask the underlying architecture from the user. This will allow the sound spaces of different engines to be explored with the same interface without having to worry about the underlying implementation. To do this successfully, a suitable graphical interface will need to be created that allows the sound space to be explored intuitively whilst masking the underlying detail.
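
One way to keep the interface independent of the synthesis engine is to let the interpolator work only with normalised values in the range 0 to 1 and to give each engine a small adapter that converts to and from its native parameter ranges. The sketch below assumes this design; `ParamSpec`, `EngineAdapter` and the example parameter names are hypothetical and are not part of Max/MSP.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ParamSpec:
    """Description of one engine parameter (hypothetical schema)."""
    name: str
    low: float
    high: float


class EngineAdapter:
    """Hides a particular synthesis engine behind a normalised 0..1 parameter vector."""

    def __init__(self, specs):
        self.specs = list(specs)

    def to_engine(self, normalised):
        # Convert interface-space values (0..1) to the engine's native ranges.
        if len(normalised) != len(self.specs):
            raise ValueError("parameter count mismatch")
        return {
            s.name: s.low + v * (s.high - s.low)
            for s, v in zip(self.specs, normalised)
        }

    def from_engine(self, values):
        # Convert native engine values back to 0..1 for storage in the interpolation space.
        return [(values[s.name] - s.low) / (s.high - s.low) for s in self.specs]


# Example: a hypothetical FM engine with two parameters.
fm = EngineAdapter([ParamSpec("carrier_hz", 20.0, 2000.0), ParamSpec("mod_index", 0.0, 10.0)])
native = fm.to_engine([0.5, 0.2])
```

A linear mapping is used for brevity; parameters such as frequency would usually be better served by an exponential curve, which the adapter could apply without the interpolator needing to know.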

Multi-Touch Screen Technology

Tablet computers that offer mobile multi-touch functionality, such as the Apple iPad, have become pervasive in modern society, particularly for content delivery. However, they have been less commonly used for content creation [1], and this is especially true in the area of sound design. Recent software releases for these devices [2], [3], [4] and new audio/MIDI interfaces [5], [6] mean that this technology can now start to be used for content creation in this area. This poses some interesting new research questions, such as: Can multi-touch computers offer a viable interface for synthesizer programming and sound design? Will mobile technologies allow content creation to be performed collaboratively between multiple parties in different locations?

To answer these questions and give context to the previous research questions, a SYTER-style [7] interpolation system has been developed. When functional testing of this system has been completed, a secondary system will be created that allows the interpolation to be controlled remotely via the iPad. This raises two interesting areas for consideration. First, when a traditional mouse and screen are used to control the interpolation, only one point can be moved at a time, whereas with the iPad multiple points could potentially be controlled simultaneously. Second, as the tablet device is intended to be used purely for control purposes, a number of users could potentially control the interpolation at the same time, opening up the possibility of collaborative sound design. These are exciting prospects and ones that warrant further investigation.
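
To illustrate how simultaneous control differs from a single mouse pointer, the sketch below tracks several touches at once, each dragging its own point in the interpolation space. It assumes touch identifiers are unique across devices (for example a device name paired with a touch number), so the same logic would cover several users sharing one interpolator; the class, its method names and the grab radius are hypothetical.

```python
import math


class MultiTouchController:
    """Tracks simultaneous touches, each dragging one point in the interpolation space."""

    def __init__(self, points, grab_radius=0.05):
        self.points = dict(points)   # point name -> (x, y) in normalised screen space
        self.grab_radius = grab_radius
        self.active = {}             # touch id -> name of the point it is dragging

    def touch_began(self, touch_id, x, y):
        # Grab the nearest point that is within reach and not held by another touch.
        taken = set(self.active.values())
        candidates = [
            (math.hypot(px - x, py - y), name)
            for name, (px, py) in self.points.items()
            if name not in taken
        ]
        in_reach = [c for c in candidates if c[0] <= self.grab_radius]
        if in_reach:
            self.active[touch_id] = min(in_reach)[1]

    def touch_moved(self, touch_id, x, y):
        # Only touches that grabbed a point move anything.
        name = self.active.get(touch_id)
        if name is not None:
            self.points[name] = (x, y)

    def touch_ended(self, touch_id):
        self.active.pop(touch_id, None)
```

With a mouse, only one entry could ever be present in `active`; with multi-touch, or several tablets, each finger holds its own point.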

Work Completed

The synthesis engine and interpolation have been realised using the Max/MSP visual programming environment [8], which allows relatively fast development with many standard building blocks. For testing purposes, the idea is to use the interpolation system with a variety of different synthesis engines. Fortunately, Max/MSP comes with several pre-built synthesis engines, so the interpolation interface has been designed to connect directly to these engines.

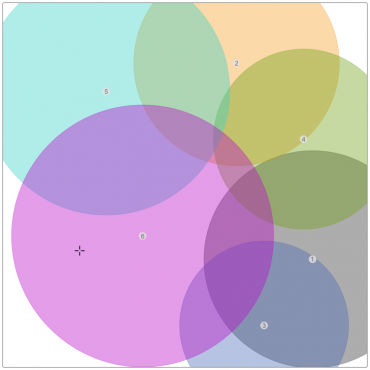

The interface presented uses the same paradigm as the original SYTER Interpol system, where each sound, defined by a set of synthesizer parameters, is represented as a circle in the interpolation space [7]. An example of the created interpolation space is shown in Figure 1, where interpolation is being performed between six sounds.

The diameter of each circle defines the “strength” that each sound possesses in the gravitational model. As the user moves the crosshairs, a smooth interpolation is performed between the sounds that the circles represent. This is achieved by performing a linear interpolation between the corresponding parameters of the sounds defined by the circles. In this way, new sounds can be identified by the sound designer, or the space can be used during a performance as a mechanism for creating morphing sounds.
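
The post does not give the exact weighting used, so the following Python sketch assumes one plausible gravity-style rule: each sound pulls on the crosshairs with a weight equal to its circle's diameter divided by the squared distance from the crosshairs to the circle's centre, and the output parameters are the weighted mean of the sounds' parameter sets. With two sounds this reduces to a linear interpolation between corresponding parameters. The inverse-square law and the function name `interpolate` are assumptions, not the SYTER Interpol algorithm.

```python
def interpolate(cursor, sounds, eps=1e-12):
    """Blend parameter sets using an assumed gravity-style weighting.

    cursor: (x, y) position of the crosshairs.
    sounds: list of (centre_x, centre_y, diameter, params), where params is a
            list of synthesizer parameter values of equal length for every sound.
    """
    if not sounds:
        raise ValueError("at least one sound is required")
    cx, cy = cursor
    weights = []
    for x, y, diameter, params in sounds:
        d2 = (cx - x) ** 2 + (cy - y) ** 2
        if d2 < eps:
            # Crosshairs sit on a circle's centre: return that sound exactly.
            return list(params)
        # Assumed inverse-square pull, scaled by the circle's diameter ("strength").
        weights.append(diameter / d2)
    total = sum(weights)
    n_params = len(sounds[0][3])
    # Weighted mean of each parameter across all sounds.
    return [
        sum(w * sound[3][i] for w, sound in zip(weights, sounds)) / total
        for i in range(n_params)
    ]
```

Because the weights are renormalised, the output varies continuously as the crosshairs move, and enlarging a circle draws the result towards that circle's sound.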

Further Work

The synthesis engine and the controlling interpolator have been realised on a laptop computer running the Max/MSP software. The next stage will be to implement the mobile multi-touch interface on the iPad. It is intended that this will be done using the Fantastick application [9], which allows drawing commands to be sent to the iPad from Max/MSP via a WiFi network. In this way, it should be possible to display a representation of the interpolator on the iPad that is synchronised with the software running on the computer. The user will then be able to use the multi-touch functionality of the iPad to control the interpolator.

When the system is working and functional testing has been completed, usability testing will be performed to try to answer the previously presented research questions. This will involve giving the participants specific sound design tasks so that quantitative results can be derived against the “target” sounds. The participants are likely to be asked to complete three separate tests. In the first test, the participants will be asked to create a target sound directly with the synthesis engine, without the interpolated control. Before doing this, they will be allowed to explore the synthesizer’s parameters; they will then be supplied with the target sound and asked to try to replicate it. In the second test, the participants will be supplied with the same synthesis engine, but this time controlled by the interpolation interface, with interaction by means of a traditional computer mouse or trackpad and a standard computer monitor. Again, the participants will first be asked to explore the parameter space, this time defined by an interpolation space, and will then be supplied with a target sound and asked to try to replicate it using the interpolator to control the synthesis engine. In the final test, the same interpolator and synthesis engine will be used, except that this time the multi-touch screen of the iPad will be used as the input device.

For all three tests, performance will be assessed through the time it takes to create the target sound and the accuracy with which the sound is created. In addition to this quantitative data, qualitative results will be gathered following the tests through questionnaires and focus groups. It is intended that the generated data will be analysed using a combination of Grounded Theory and Distributed Cognition approaches [10]. When this has been completed, and based on the results obtained, a second round of usability testing will be designed that will allow the use of multiple iPads for collaborative sound design to be explored.
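
Because all three tests use the same synthesis engine, one simple accuracy measure is the distance between the participant's final parameter settings and those of the target, both normalised to the range 0 to 1. The sketch below assumes this parameter-space measure and reports mean time and mean error per input condition; `TrialResult` and the condition labels are hypothetical. Parameter distance does not always track perceived similarity, so a spectral or listening-based comparison would be a reasonable alternative.

```python
import math
from dataclasses import dataclass


@dataclass
class TrialResult:
    condition: str        # e.g. "direct", "mouse" or "ipad" (hypothetical labels)
    seconds: float        # time taken to reach the final sound
    final_params: list    # normalised 0..1 parameters the participant settled on


def parameter_error(final_params, target_params):
    """Root-mean-square difference between normalised parameter vectors (0 = exact match)."""
    if len(final_params) != len(target_params):
        raise ValueError("parameter count mismatch")
    squared = sum((a - b) ** 2 for a, b in zip(final_params, target_params))
    return math.sqrt(squared / len(target_params))


def summarise(trials, target_params):
    """Return {condition: (mean seconds, mean error)} for one target sound."""
    by_condition = {}
    for trial in trials:
        by_condition.setdefault(trial.condition, []).append(trial)
    return {
        cond: (
            sum(t.seconds for t in ts) / len(ts),
            sum(parameter_error(t.final_params, target_params) for t in ts) / len(ts),
        )
        for cond, ts in by_condition.items()
    }
```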

It is anticipated that building and functional testing of the iPad-based interpolator will take another two months. The usability testing and evaluation will then probably require a further six months' work.

References

[1] Müller, H., Gove, J. L. & Webb, J. S. Understanding Tablet Use: A Multi-Method Exploration. In Proceedings of the 14th Conference on Human-Computer Interaction with Mobile Devices and Services (Mobile HCI 2012), ACM, 2012.

[2] https://itunes.apple.com/us/app/cubasis/id583976519?mt=8, accessed 10/01/13.

[3] https://itunes.apple.com/ca/app/bloom-hd/id373957864?mt=8, accessed 10/01/13.

[4] https://itunes.apple.com/gb/app/curtis-for-ipad/id384228003?mt=8, accessed 10/01/13.

[5] http://www.alesis.com/iodock, accessed 10/01/13.

[6] http://www.musicradar.com/gear/guitars/computers-software/peripherals/input-devices/audio-interfaces/iu2-562042, accessed 10/01/13.

[7] Allouis, J. & Bernier, J. Y. The SYTER project: Sound processor design and software overview. In Proceedings of the 1982 International Computer Music Conference (ICMC), pp. 232–240, 1982.

[8] Manzo, V. J. Max/MSP/Jitter for Music: A Practical Guide to Developing Interactive Music Systems for Education and More. Oxford University Press, 2011.

[9] https://itunes.apple.com/gb/app/fantastick/id302266079?mt=8, accessed 10/01/13.

[10] Rogers, Y., Sharp, H. & Preece, J. Interaction Design: Beyond Human-Computer Interaction, 3rd edition. John Wiley & Sons, 2011.